From GI Roundtable 4: Are Opinion Polls Useful? (1946)

It is generally agreed that the usefulness of public opinion polls depends on the reliability of their results. Many students believe that polling has proved a reliable guide to what Americans think or how they feel about certain issues and that the results obtained when a sample of the population is interviewed may be “blown up” to indicate what all Americans think or feel. On the other hand, some students of opinion polls declare that this method of obtaining national views cannot attain great accuracy because the sample, no matter how carefully selected, will not reflect the entire range of individual ideas or feelings that characterize 138,000,000 Americans.

Polling, however, is not an exact science. Since only samples of the population are interviewed, final figures are always subject to a margin of error. The sampling method cannot produce exact figures like those found in the laboratory of the chemist or the physicist. People of a community cannot be subjected to precise analytical measurements. Exactly what accounts for an opinion held by a person or for his personality cannot be isolated and studied as precisely as the elements which make up a chemical compound.

Nevertheless, if properly done, polls are said to produce figures very close to the nationwide average. Polls of voters taken before an election have to some extent borne this belief out. Unless opinion is divided roughly 50–50, a good poll can be expected to call the turn on the winner or on the popular side of an issue. A candidate getting about 53 percent or more of the vote can be picked to win without much question.

Does chance enter in?

Mere chance accounts for the normal margin of error. These chance factors have been studied under what is known as the “theory of probabilities.” With a little patience anyone can test this theory, using dice or pennies. And very few crapshooters who lack a working knowledge of this law keep their shirts, though they may call it intuition!

Suppose you decide to find out how many heads and tails you will get if you toss four pennies at the same time. Each penny has only two sides, and it can be proved that you are more likely to get 2 heads and 2 tails than any other combination. You can expect 4 heads from one-sixteenth of the throws and 4 tails from another sixteenth; 3 heads and 1 tail from one-fourth of the throws and 3 tails and 1 head from another fourth; and 2 heads and 2 tails from three-eighths of the throws.
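These fractions can be checked by listing all 16 equally likely ways four pennies can land and counting the heads in each. The sketch below assumes fair pennies that land independently; the function name `head_count_distribution` is our own, chosen for illustration.

```python
from collections import Counter
from fractions import Fraction
from itertools import product


def head_count_distribution(n_pennies: int = 4) -> dict[int, Fraction]:
    """Enumerate every equally likely heads/tails outcome and return the
    exact probability of each possible number of heads."""
    outcomes = list(product("HT", repeat=n_pennies))  # 2**n equally likely results
    counts = Counter(seq.count("H") for seq in outcomes)
    total = len(outcomes)
    return {heads: Fraction(c, total) for heads, c in sorted(counts.items())}


if __name__ == "__main__":
    for heads, prob in head_count_distribution(4).items():
        print(f"{heads} heads, {4 - heads} tails: {prob}")
```

Run as a script, it prints 1/16 for 0 heads, 1/4 for 1 head, 3/8 for 2 heads, 1/4 for 3 heads, and 1/16 for 4 heads, matching the figures above. Exact fractions are used instead of floating point so the results compare directly with the text.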

Suppose, however, that when you toss the pennies the first time you get 4 heads. You toss them a second time and, perhaps to your surprise, you get 4 heads again! Of course you wouldn’t conclude that the pennies have only heads and no tails. You not only know otherwise, but you also know that you haven’t tossed the pennies often enough to support such a conclusion. Your sample isn’t big enough. But if you toss the four pennies over and over again, recording the combination of heads and tails after each toss, you will find that your results come closer and closer to the figures you would expect theoretically. The margin of error tends to shrink as the number of tosses (the sample) increases. If you keep tossing until you have made 1,000 or more throws, you should get the expected results within a small margin of error.
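The penny experiment can be simulated rather than performed by hand. This is a minimal sketch, assuming fair and independent pennies and using Python's built-in pseudo-random generator with a fixed seed so runs are repeatable; the names `expected_shares` and `largest_gap` are hypothetical helpers, not part of any standard method.

```python
import random
from math import comb


def expected_shares(n_pennies: int = 4) -> dict[int, float]:
    """Theoretical share of throws showing each number of heads."""
    return {k: comb(n_pennies, k) / 2**n_pennies for k in range(n_pennies + 1)}


def largest_gap(n_throws: int, n_pennies: int = 4, seed: int = 1946) -> float:
    """Toss n_pennies fair pennies n_throws times and return the largest gap
    between an observed share and its theoretical share."""
    rng = random.Random(seed)  # fixed seed so the experiment is repeatable
    tallies = [0] * (n_pennies + 1)
    for _ in range(n_throws):
        heads = sum(rng.random() < 0.5 for _ in range(n_pennies))
        tallies[heads] += 1
    expected = expected_shares(n_pennies)
    return max(abs(tallies[k] / n_throws - expected[k]) for k in expected)


if __name__ == "__main__":
    for n in (10, 100, 1_000, 10_000):
        print(f"{n:>6} throws: largest gap = {largest_gap(n):.3f}")
```

On average the gap shrinks roughly in proportion to one over the square root of the number of throws, so quadrupling the tosses about halves the typical error. Any single run can still buck the trend, which is exactly the chance factor the text describes.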

The same rules, and similar chances of error, operate when you carefully sample the population in an opinion survey. In other words, even if the sampling and interviewing have been perfectly carried out, it can be proved mathematically that chance alone produces small errors. For example, in a sample of 1,000 taken at random, the chances are 95 out of 100 that the error will be no more than about 3 percentage points, although in the other 5 times it may be larger. The chances are 99 out of 100 that the error will be no more than about 4 percentage points. If the sample is 2,500, taken at random, the corresponding margins are about 2 percentage points and a little under 3. This means that if the same questions were asked of the whole population, the figures would be likely to fall within a few points of those obtained by careful use of the sampling method. The risk is small; it is considered a reasonably safe gamble.
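The margins quoted above follow from a standard formula for a proportion estimated from a simple random sample. The sketch below is a minimal version assuming simple random sampling, the normal approximation, and a true split near 50–50 (which gives the widest, most cautious margin); real polls of the period used quota and other designs, so treat this as an idealized check. The name `margin_of_error` is our own.

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(n: int, confidence: float = 0.95, p: float = 0.5) -> float:
    """Half-width of the normal-approximation confidence interval for a
    proportion p estimated from a simple random sample of size n.
    p = 0.5 gives the widest (most cautious) margin."""
    z = NormalDist().inv_cdf((1 + confidence) / 2)  # about 1.96 for 95%
    return z * sqrt(p * (1 - p) / n)


if __name__ == "__main__":
    for n in (1_000, 2_500):
        for conf in (0.95, 0.99):
            moe = 100 * margin_of_error(n, conf)
            print(f"n={n}, {conf:.0%} confidence: ±{moe:.1f} percentage points")
```

This prints about 3.1 and 4.1 points for a sample of 1,000 and about 2.0 and 2.6 points for 2,500, in line with the rounded figures in the text. Because the margin depends on the square root of the sample size, going from 1,000 to 2,500 interviews narrows it only by a factor of about 1.6.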

Are the differences significant?

It follows that if two or more polls asking the same questions get answers that differ by no more than these normal errors, they have, for all practical purposes, achieved the same results. Indeed, it would be mere chance if they came out with exactly the same percentages. If the polls announce results that differ by a larger margin than normal error permits, then the difference is significant and has some cause other than chance. The samples may not have been equally true cross sections of the population, or the questions may have been worded differently in each poll.
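One common way to decide whether two polls differ by more than chance allows is to compare their gap with the standard error of a difference between two independent proportions. The sketch below assumes both polls are independent simple random samples and uses the normal approximation; `differ_significantly` is a hypothetical helper name, and the example figures are invented for illustration.

```python
from math import sqrt
from statistics import NormalDist


def differ_significantly(p1: float, n1: int, p2: float, n2: int,
                         confidence: float = 0.95) -> bool:
    """Return True if two independent random-sample polls differ by more than
    chance alone would normally produce at the given confidence level."""
    # Standard error of the difference between two independent proportions:
    # each poll contributes its own chance error.
    se_diff = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    z = NormalDist().inv_cdf((1 + confidence) / 2)
    return abs(p1 - p2) > z * se_diff


if __name__ == "__main__":
    print(differ_significantly(0.52, 1000, 0.50, 1000))  # 2-point gap
    print(differ_significantly(0.58, 1000, 0.50, 1000))  # 8-point gap
```

With two polls of 1,000 each near an even split, the gap must exceed roughly 4.4 points before it counts as significant at 95-in-100 odds. That threshold is wider than either poll's own margin because both samples carry chance error, so a 2-point disagreement is unremarkable while an 8-point one points to a real difference in sampling or question wording.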

The champions of polling methods say there is strong evidence that the major polls get the same results for all practical purposes. Moreover, their figures tend to vary less and less from true figures, such as election returns, as the years go by. In 1936 the Gallup Poll was 6 percentage points wide of the actual division of the votes cast for Landon and Roosevelt; it was 4.5 points off the mark in 1940, and less than 2 points off in 1944. In 1944, however, the Gallup Poll was much farther off the mark in forecasting the electoral vote. It indicated a fairly close race between Roosevelt and Dewey so far as electoral votes were concerned, but Roosevelt actually won 432 electoral votes to Dewey’s 99. Its state-by-state sampling, in other words, was much less accurate than its national sampling.

The Fortune (Elmo Roper) Poll varied from the results by only 1 percentage point in each of these elections.

The pollers claim that these results are better than those of the more haphazard methods used in the past. They far surpass, for example, the Literary Digest poll of 1936, which was not based on a carefully selected cross section of the population and which resulted in an error of 19 percentage points. That error helped to end the existence of the Literary Digest.
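The contrast with the Literary Digest turns on the difference between chance error and bias: chance error shrinks as the sample grows, but a list that is not a true cross section gives the wrong answer no matter how many ballots come back. The sketch below illustrates this with an entirely invented electorate; `FRAME_SHARE`, `SUPPORT_IN_FRAME`, and `SUPPORT_OUTSIDE` are hypothetical figures chosen for illustration, not historical data, and `poll` is our own helper name.

```python
import random

# Hypothetical electorate, invented purely for illustration:
FRAME_SHARE = 0.40       # fraction of voters who appear on the poll's mailing list
SUPPORT_IN_FRAME = 0.45  # support for candidate A among listed voters
SUPPORT_OUTSIDE = 0.70   # support for candidate A among everyone else
TRUE_SUPPORT = FRAME_SHARE * SUPPORT_IN_FRAME + (1 - FRAME_SHARE) * SUPPORT_OUTSIDE


def poll(sample_size: int, whole_population: bool, seed: int = 1936) -> float:
    """Estimate support for candidate A. If whole_population is False, every
    respondent is drawn from the mailing list only (a biased frame)."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    yes = 0
    for _ in range(sample_size):
        listed = (not whole_population) or rng.random() < FRAME_SHARE
        support = SUPPORT_IN_FRAME if listed else SUPPORT_OUTSIDE
        yes += rng.random() < support
    return yes / sample_size


if __name__ == "__main__":
    print(f"true support: {TRUE_SUPPORT:.1%}")
    for n in (1_000, 100_000):
        print(f"n={n:>7}: random cross section {poll(n, True):.1%}, "
              f"mailing list only {poll(n, False):.1%}")
```

In this made-up setting the true support is 60 percent. The random cross section settles near that figure as the sample grows, while the mailing-list poll settles near 45 percent and stays there: a bigger sample only makes the wrong answer more precise.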

To summarize the means by which one can try to tell the difference between reliable and unreliable polls:

- Does the polling organization explain its procedures to the public so that anyone can determine whether it follows reliable practices? Is the organization willing to submit its data to impartial analysis?

- The reader should examine carefully the questions and results of polls which come to his attention. Are the questions neutral in tone or are they “loaded”? Do the questions deal with real and present situations? Are they merely intended to bring out opinions on the basis of assumptions? Do the questions and answers deal with past, present, or future situations?

- Who is sponsoring the polls? Is the sponsor an organization interested, for example, in research or in the welfare of the general public? Or does the organization gather data furthering the interests of some special group?

- If the poll is conducted by a private organization, does it depend for its existence on special interests, or on support from the general public? The major polls can maintain public confidence only so long as they remain accurate within the limits of chance error.

- Do the privately owned polls which regularly announce their findings undergo an audit or checkup of their results? At least two organizations have given funds for the study of modern polling methods. The funds are used partly for research and partly for analyzing figures supplied by the major polls.

- Does the poll usually attempt to go beyond simple yes-no questions? Does it try to arrive at an understanding of the public’s attitude on questions? In addition to giving figures on mere division of opinion, does it try to find (a) what interest the public has in the issue, (b) what information the public has on the issue, (c) what reasons people have for their viewpoints, and (d) how intensely people feel about the issue?

How Prewar US Public Opinion Shifted in Response to Events Abroad

| | Yes | No | Don’t know |
|---|---|---|---|
| January 1936 | | | |
| Would you be willing to fight or have a member of your family fight … | | | |
| … in case we were attacked on our own territory? | 80.3% | 15.6% | 4.1% |
| … in case the Philippines were attacked? | 23.8% | 66.8% | 9.4% |
| … in case a foreign power tried to seize land in Central or South America? | 17.4% | 73.8% | 8.8% |
| … in case our foreign trade were seriously interfered with by force? | 34.4% | 53.8% | 11.8% |
| July 1937 | | | |
| Do you believe there is likely to be a major European or Asiatic war in the next two or three years? | 46.9% | 29.2% | 23.9% |
| If yes, do you think the US is likely to be drawn in? | 46.6% | 36.6% | 16.8% |

| | Share of respondents |
|---|---|
| December 1939 | |
| Favor entering war now | 2.5% |
| Fight only if Germany seems likely to win unless we did | 14.7% |
| Favor policy of economic discrimination against Germany | 8.9% |
| Favor maintaining strict neutrality | 67.4% |
| Find some way to support Germany | 0.2% |
| Other | 2.4% |
| Don’t know | 3.9% |
| August 1941 | |
| Those who think this is our war are wrong, and the people of this country should resist to the last ditch any move that would lead us further toward war. | 16.3% |
| A lot of mistakes have brought us close to a war that isn’t ours, but now it’s done, and we should support, in full, the Government’s program. | 22.4% |
| While at first it looked as if this wasn’t our war, it now looks as though we should back England till Hitler is beaten. | 41.3% |
| It’s our war as well as England’s, and we should have been in there fighting with her long before this. | 12.4% |
| Don’t know | 7.6% |
| For military intervention (the 41.3% and 12.4% responses combined) | 53.7% |

Related Resources

September 7, 2024

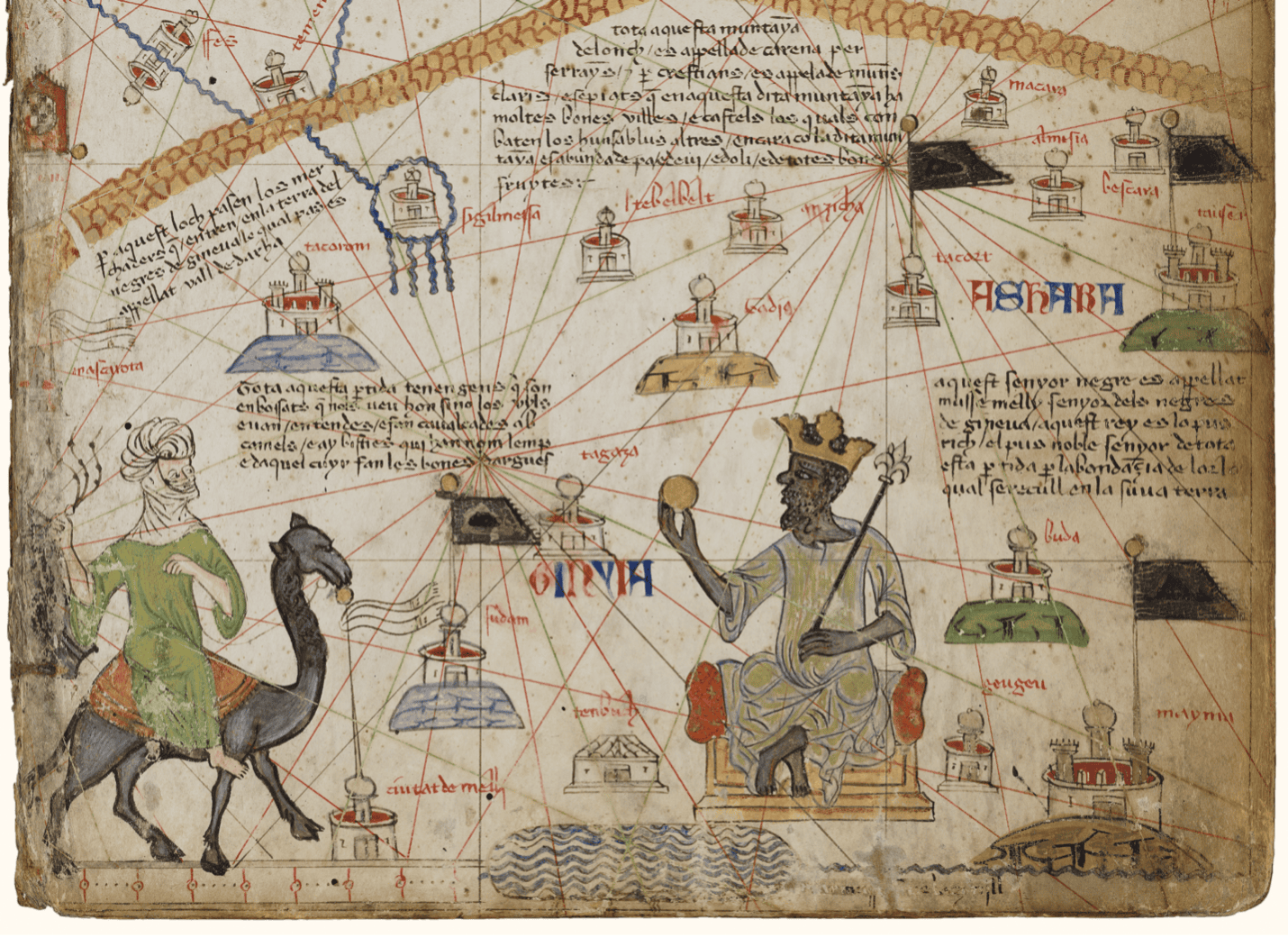

Travel and Trade in Later Medieval Africa

September 6, 2024

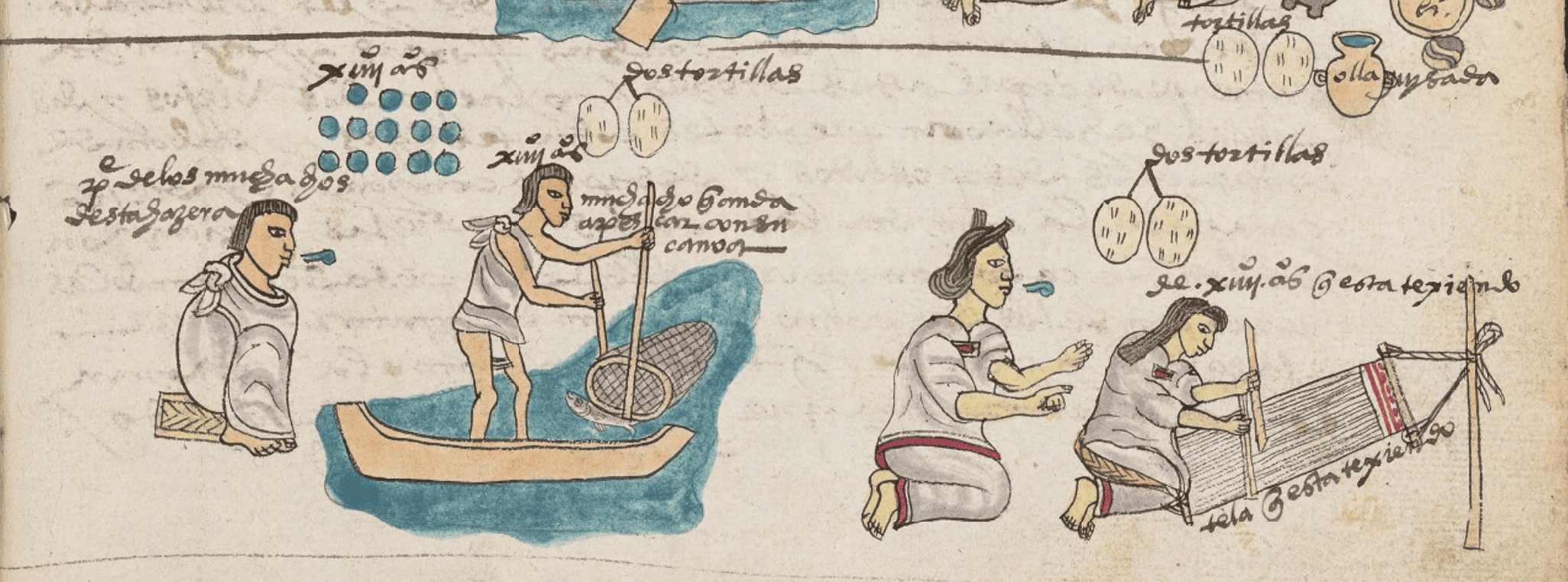

Sacred Cloth: Silk in Medieval Western Europe

September 5, 2024