The first time I ran into the idea that history degrees should have assessable outcomes was in 1996. A new department head, I was engaged in writing the department’s self-evaluation for re-accreditation. Filling in the endless form, I came to the demand “what are your outcomes and how do you measure them?” I blithely wrote: “Historians do not measure their effectiveness in outcomes.” For that, I was publicly denounced as “obstructionist” by the psychologist in charge of the process. He wanted concrete outcomes, measurable in psychometrics.

I now believe outcomes can be the historian’s friend. Still department head, I have served on review and accreditation teams for other departments and universities, and I have become deeply involved in issues of transfer and accreditation as the chair of the Utah Regents’ Task Force on General Education. These experiences have taught me that history programs do have outcomes that are assessable, and that we do not need the external validation provided by accrediting agencies such as NCATE and ABET. What we can and must articulate are the values and processes of our degree in ways that external audiences can understand, and outcomes assessment can help us do it.

Our opportunity to shape the conversation about outcomes and assessment is obvious: although accrediting agencies can insist that we define and assess outcomes, they cannot tell us what good ones look like. As I was told at an accreditors’ workshop, as long as assessment is done, no judgment on quality need be made. The definitions, therefore, are ours to form.

Departments grappling with assessment need to undertake a three-part exercise. First, there must be a conversation about the goals of the degree. If we ask why we bother to teach history, a general list will quickly develop and it will include the usual sweeping generalizations. My department’s list of aims at Utah State is representative:

- To train undergraduates to research, analyze, synthesize, and communicate accurate conclusions about change over time by using the historical method.

- To prepare history majors to succeed in history-related fields of endeavor such as museum curation, archival curation, heritage tourism, cultural resource management, or any other field that requires information retrieval and analysis skills.

- To inculcate cultural literacy by giving students a broad familiarity with the past.

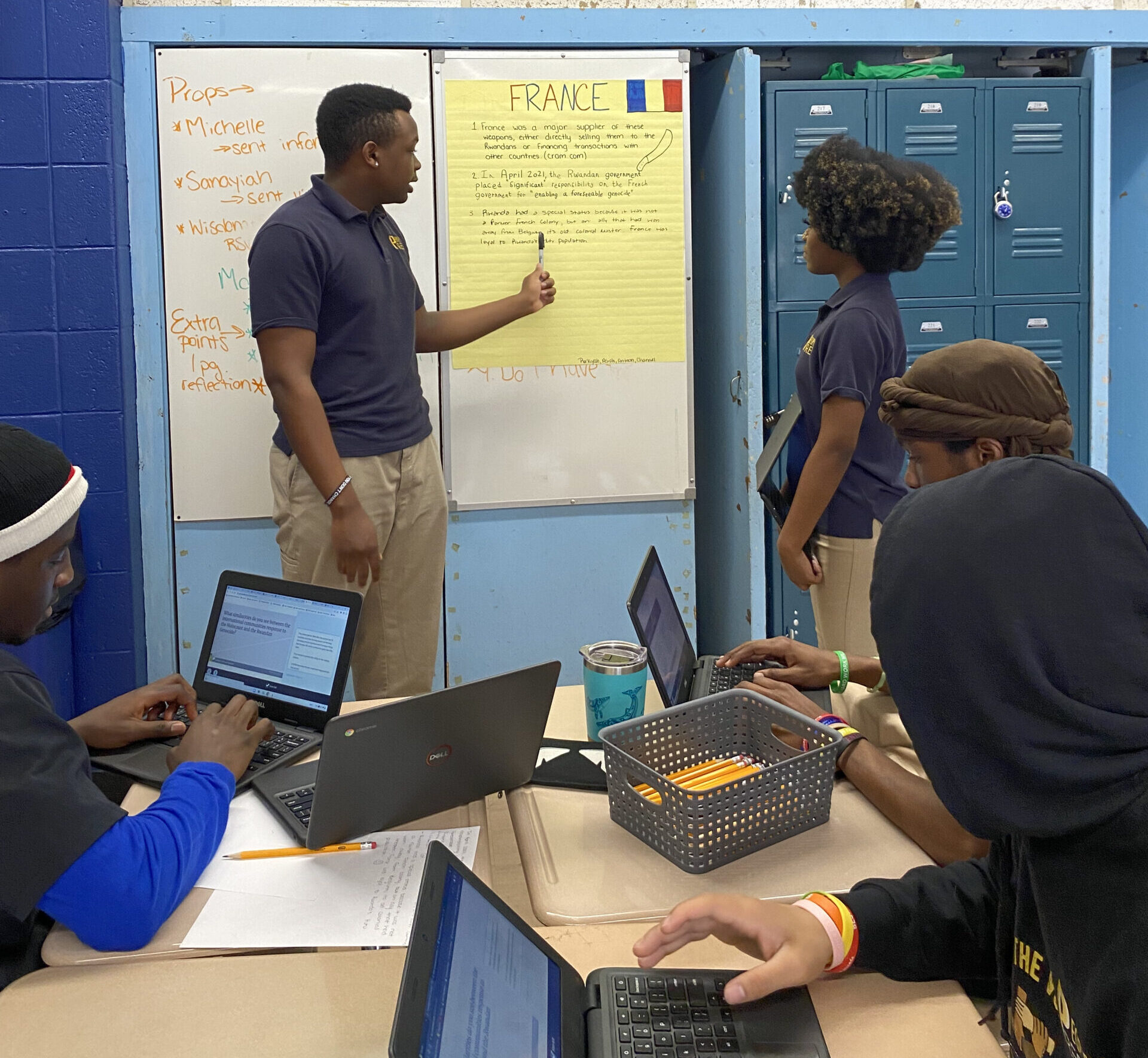

The second conversation needs to ask how these goals are achieved in practice. Here, we are talking about outcomes, and these generally take two broad forms: content knowledge and process experience. Are the majors being taught an appropriate chronological, geographical, and methodological range? Are they being taught to form historical questions, research from primary sources, and argue effectively in writing? Are we expecting increasing levels of sophistication? Once you have identified these outcomes, you might ask, “How do we know we are teaching in ways that prepare students to demonstrate these outcomes?” One useful approach is to create a matrix that maps courses and expectations onto learning outcomes, showing where and how various goals are achieved. This exercise makes explicit to your department (and your students) what is usually implicit in your approach to teaching. Since all assessment, to be useful, must study what you actually teach, you should map only your real curriculum and, if you discover holes in it, fill them. Fixing things because of assessment activities gives you greater cachet with accreditors. Moreover, if you tell your students what you learn about the functioning of your curriculum, you can improve their performance and raise your expectations at the same time.

Identifying goals and outcomes is the easy bit. We are voicing what we already do. The scary third part is the assessment. It is frightening because there is seldom a known audience. Depending on your institutional type, you might be trying to provide assessments to please legislators, donors, trustees, regents, chancellors, presidents, provosts, deans, transfer and articulation specialists, your colleagues on promotion and tenure committees, parents, students, newspaper reporters, and even regional accreditors. Our institutions and systems send conflicting instructions, too, which makes things even worse. Are we to assess in order to inform consumers, as the Spellings Commission thought, or do we assess to improve our teaching? And how is the answer to be expressed? In my own jaundiced opinion, the answer can be nothing more than a meaningless number—Douglas Adams’s “42,” which is the answer to life, the universe, and everything, comes to mind—as long as the “consumers” are assured that it is arrived at according to scientific principles (42 can be derived using base 13, which is likely to stop all criticism dead).

But if 42 is the answer, we are professionally free to assess according to the standards of our discipline, and we might as well use the opportunity to do assessment that actually informs and improves our practice. We already do this when we grade; the assessment of degree outcomes takes us to the next level of analysis. The course has a purpose and evidence of successful completion. So does the degree.

There are many ways of conducting assessment of a degree. Being historians, we tend to prefer qualitative analysis over quantitative. No mere number, even 42, will please us, so we need other approaches. One of the most common is the use of a capstone course. Most of us have senior seminars, senior theses, or similar activities for which students are expected to conduct and write up a piece of research according to professional standards. Those papers can be the raw material for a meaningful assessment. The department, or a subcommittee of the lucky, can read a set and report on what they learn about students’ achievement. You can even require students to place samples of their work into electronic portfolios, so that a pattern of development can be seen. In the best of all possible worlds, Dr. Pangloss and his assessor colleagues might invite each student to write a reflective essay about his or her experience in the major, relative to the explicit goals and outcomes of the department.

Of course, it helps if you teach your students about the goals and methods of the department, making them informed participants in the process. There are various ways of doing this. Perhaps the easiest is to insist that syllabi identify the role each course plays in meeting the goals of the department. This will make the course exams part of the assessment of the goals, while teaching faculty and students to think about their degrees in more sophisticated ways.

Questionnaires can be added as a further teaching tool. My department samples new majors and graduating majors, asking them questions about their expectations and experiences.

Of course, all of this is open to the criticism that internal assessment may be biased, but that is only a problem if you believe that you must prove that your “culture of assessment” has produced “continuous improvement” until it reaches the ethereal sum of 42. If, on the other hand, you are doing this to learn about your department’s curriculum and its effect on majors, the conversation is what matters, not the number. If your administration wants more complex assessment, you might suggest that the papers, exams, and other work you have collected from your students can be externally validated according to the standards of the discipline of history. British universities do this routinely, with external examiners reading the papers of graduates and reporting on the strengths and weaknesses apparent in the students’ work. However, do not suggest this unless you know your dean will pay for external examiners!

An easier way to defend our own degree assessments is to plug our departments into the international conversation about history assessment going on in 46 nations of Europe, Latin America, Central Asia, and Australasia. Europe’s Bologna Process is seeking to bridge the gaps between national educational systems by defining what experiences make a strong degree. History was chosen as a subject universally taught, and “tuning committees” in every participating nation have been charged with identifying the common goals and processes that make history degrees distinct forms of academic preparation. The intention is to produce an internationally recognized and validated set of outcomes for the history degree. To get some idea of what they are doing, look at the summary provided by Joaquim Carvalho, the benchmark statements for history in the United Kingdom, and the Libro Blanco: Título de Grado en Historia from Spain.[1] For the larger context, try CLIOHnet and the recent work of Clifford Adelman.[2]

As Adelman points out, one of the reasons we Americans are having such trouble defining our outcomes and assessments is that our system of transfer and articulation refuses to ask about course content and method. Invented by Morris L. Cooke in his 1910 book Academic and Industrial Efficiency, the student credit hour became an efficient tool for transfer because it had a static definition that assumed standardized educational outputs and faculty workloads. It did not establish overall degree outcomes, except to say that it took 120 hours to graduate.

Our current angst about outcomes and assessment grows out of the long-established custom of measuring the curriculum by hours spent in seats rather than the learning achieved by students. We as teachers, straitjacketed by credit hours, have spent much of our lives shoehorning content and method into arbitrary blocks of seat time. The demand for outcomes assessment should be seized as an opportunity for us to actually talk about the habits of mind our discipline needs to instill in our students. It will do us a world of good, and it will save us from the spreadsheets of bureaucrats who wish us to show continuous improvement until we score 42.

Notes

1. Joaquim Carvalho, “Tuning and the History Subject Area,” https://eden.dei.uc.pt/~joaquim/homepage/downloads/files/TuningAndHistory.pdf.

2. Clifford Adelman, The Bologna Club: What U.S. Higher Education Can Learn from a Decade of European Reconstruction (2008). See also “On Accountability, Consider Bologna,” https://www.insidehighered.com/news/2008/07/28/accountability-consider-bologna.

Norman Jones is professor in the department of history (which he chairs) at Utah State University. He is currently a senior visiting research fellow at Oxford University’s Jesus College.